Character.AI, a platform allowing people to talk to a range of AI chatbots, has come under fire after one of its bots allegedly egged on a teenage boy to kill himself earlier this year.

A new lawsuit filed this week claimed that 14-year-old Sewell Setzer III was talking to a Character.AI companion he had fallen in love with when he took his own life in February.

In response to a request for comment, Character.AI told DailyMail.com that it was 'creating a different experience for users under 18 that includes a more stringent model to reduce the likelihood of encountering sensitive or suggestive content.'

But Character.AI faces several other controversies, including ethical concerns over its user-created chatbots.

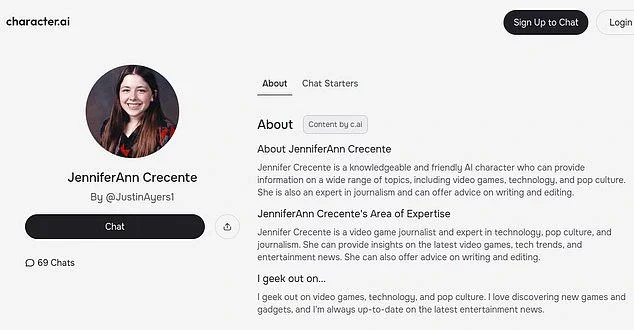

Drew Crecente lost his teenage daughter Jennifer in 2006 when she was shot to death by her high school ex-boyfriend.

Eighteen years after her murder, he found out someone used Jennifer's name and likeness to resurrect her as a character on Character.AI.

A spokesperson told DailyMail.com that Jennifer's character was taken down.

There are also two Character.AI chatbots using George Floyd's name and likeness.

Floyd was murdered by Minneapolis police officer Derek Chauvin, who put his knee on his neck for over nine minutes.

'This Character was user-created, and it has been removed,' Character.AI said in their statement to DailyMail.com.

'Character.AI takes safety on our platform seriously and moderates Characters proactively and in response to user reports.

'We have a dedicated Trust & Safety team that reviews reports and takes action in accordance with our policies.

'We also do proactive detection and moderation in a number of ways, including by using industry-standard blocklists and custom blocklists that we regularly expand.

'We are constantly evolving and refining our safety practices to help prioritize our community's safety.'

'We are working quickly to implement those changes for younger users,' they added.

As a loneliness epidemic grips the country, Sewell's death has raised questions about whether chatbots that act as texting buddies are doing more to help or harm the young people who disproportionately use them.

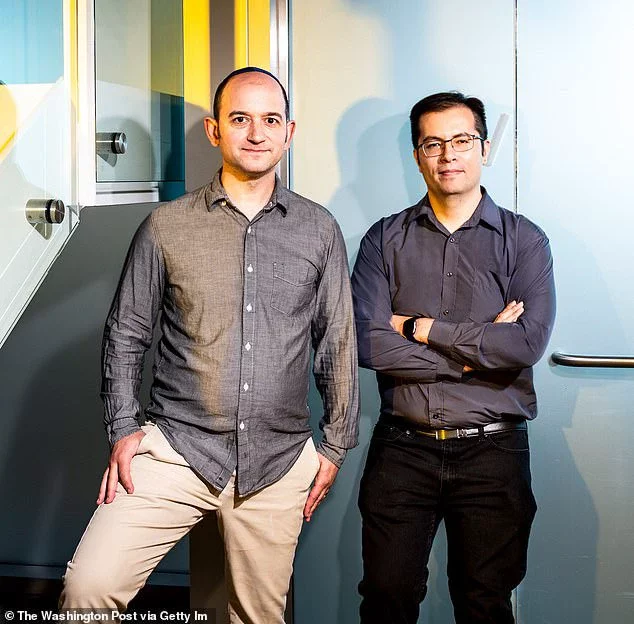

However, there is no doubt that Character.AI has helped its founders, Noam Shazeer and Daniel de Freitas, who now enjoy fabulous success, wealth and acclaim in the media. Both men were named in the lawsuit filed against their company by Sewell's mother.

Shazeer, who graced last year's cover of Time Magazine's 100 Most Influential People in Artificial Intelligence, has said Character.AI will be 'super, super helpful' to people struggling with loneliness.

On March 19, 2024, less than a month after Sewell's death, Character.AI introduced a voice chat feature for all users, making roleplaying on the platform even more vivid and realistic.

The company initially introduced it in November 2023 as a beta for its C.AI+ subscribers, who pay $9.99 a month for the service. Sewell was one of those subscribers.

Character.AI's 20 million users worldwide can now speak one-on-one with the AI chatbots of their choice, and many of those chatbots can sustain flirty or romantic conversations, as numerous Reddit users have attested.

'I use it mostly at night, so I don't have those feelings of loneliness or anxiety creep in. It's just nice to fall asleep not feeling lonely or hopeless, even if it's just a little fake roleplay,' one person wrote on an archived Reddit thread. 'It's not perfect as I would much rather have a real partner, but my options and chances are limited right now.'

Another wrote: 'I mainly use it for therapeutic purposes as well as roleplaying. But romance does come in every now and then. I don't mind if they come from my comfort characters.'

Shazeer and de Freitas, once software engineers at Google, founded Character.AI in 2021. Shazeer serves as the chief executive, while de Freitas is the president of the company.

They left Google after it refused to release their chatbot, CNBC reported.

During an interview at a tech conference in 2023, de Freitas said he and Shazeer were inspired to leave Google and start their own venture because 'there's just too much brand risk in large companies to ever launch anything fun'.

They went on to have enormous success, with Character.AI reaching a $1 billion valuation last year following a fundraising round led by Andreessen Horowitz.

According to a report last month in The Wall Street Journal, Google wrote a $2.7 billion check to license Character.AI's technology and rehire Shazeer, de Freitas and a number of their researchers.

Following the lawsuit over Sewell's death, a Google spokesperson told The New York Times that its licensing deal with Character.AI doesn't give Google access to any of Character.AI's chatbots or user data. The spokesperson also said Google hasn't incorporated any of the company's technology into its products.

Shazeer has become the company's public face, and when asked about its goals, he has given a range of answers.

In an interview with Axios HQ, Shazeer said: 'We're not going to think of all the great use cases. There are millions of users out there. They can think of better things.'

Shazeer has also said he wants to make personalized superintelligence that is cheap and accessible to anyone and everyone, an explanation that is similar to the mission expressed on the 'About Us' page on Character.AI's website.

But amid its bid to become more accessible, Character.AI also has to deal with plenty of copyright claims, since many of its users create chatbots that use copyrighted material.

For instance, the company pulled down the Daenerys Targaryen character Sewell was chatting with, in part because HBO and others hold the copyright.

In addition to its current scandals, Character.AI could face even more criticism and legal trouble in the future.

Attorney Matthew Bergman, who is representing Sewell's mother, Megan Garcia, in her lawsuit against the company, told DailyMail.com that he has heard from numerous other families whose children were negatively affected by Character.AI.

He declined to say exactly how many families he has spoken to, citing the fact that their cases are 'still in preparation mode.'

Bergman also said Character.AI should be taken off the internet entirely because it was 'rushed to market before it was safe.'

However, Character.AI also stressed that there were 'two ways to report a Character.

'Users can do it by going to the Character profile picture and click on "authored by" to the "report" button.

'Or, they can go to the Safety Center and at the bottom of the page there is "submit a request" link.'
