According to the report, Twitter failed to meet most of the criteria set by the organization regarding climate misinformation policies.

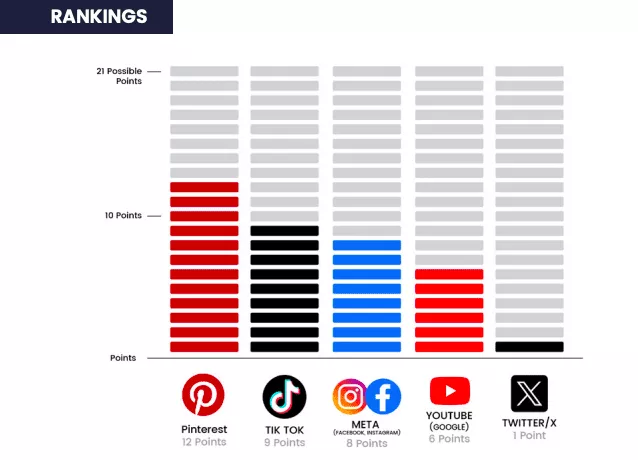

A recent report that ranks tech platforms on their handling of climate change misinformation has given Twitter (recently rebranded as X) the lowest score: just one point out of a possible 21. That single point was awarded solely for the platform's easily accessible and readable privacy policy.

The report, published by Climate Action Against Disinformation, assessed the content moderation policies and efforts of major tech platforms such as Meta (formerly Facebook), Pinterest, YouTube, TikTok, and Twitter in combating inaccurate information, particularly climate denialism.

According to the report, Twitter failed to meet most of the criteria set by the organization regarding climate misinformation policies. These criteria included providing clear and publicly available information on climate science and having well-defined policies on addressing the spread of misinformation.

The report read:

Twitter/X received only one point, lacking clear policies that address climate misinformation, having no substantive public transparency mechanisms, and offering no evidence of effective policy enforcement.

The report pointed to the turmoil that followed the takeover of Twitter by Elon Musk, the billionaire tech mogul, which has created uncertainty about how policies are implemented and how content decisions are made.

The report added that some existing policies that could have been useful are no longer being enforced following Musk's acquisition and the subsequent policy changes.

Twitter/X lacks a concrete policy outline

Twitter/X lacks a policy specifically targeting user-generated climate misinformation content. Neither YouTube nor Twitter/X garnered recognition in this regard, as their advertising and user-generated content policies conspicuously lack explicit mentions of climate misinformation.

Notably, Twitter/X is the only platform not to receive credit for addressing this issue. Its Terms of Service omit any reference to misinformation in any form, and guidance on reporting misinformation appears to be absent in many countries. More broadly, the common theme across these platforms is a lack of clarity in their policies on mitigating misinformation.

In essence, the absence of a dedicated policy addressing user-generated climate misinformation on Twitter/X raises concerns. Other platforms also fail to provide comprehensive coverage in their policies, thus highlighting the broader issue of unclear guidelines for tackling misinformation across social media platforms.

As misinformation on climate-related topics continues to be a pressing concern, these platforms must consider strengthening their policies and providing clear directives to promote accurate and responsible information dissemination. In doing so, they can play a vital role in combating the spread of climate misinformation and promoting informed discussions on critical environmental issues.

While the other platforms fared better, none scored highly. Pinterest received the highest score, 12 out of 21. The issues identified across platforms included a lack of clear definitions of climate misinformation, a failure to enforce existing policies transparently, and insufficient evidence that these policies are applied consistently across different languages. The report also noted that none of the companies publicly discloses how algorithmic changes affect climate misinformation.

Key Challenges

Tech platforms face several challenges when formulating effective content moderation policies. Some of the key challenges include:

Scale and Volume: The sheer scale and volume of content pose a significant challenge in identifying and moderating harmful or misleading information.

Contextual Understanding: Content moderation requires a nuanced understanding of context, intent, and cultural differences.

Speed and Timeliness: There is a constant race against time to detect and remove false or harmful content before it reaches a wider audience. Implementing efficient systems and processes to identify and address misinformation in real-time is a significant challenge.

Policy Consistency: Ensuring consistency in policy enforcement across diverse platforms and global user bases is challenging. Balancing these differences while maintaining a coherent and consistent approach to content moderation is a complex task.

Algorithmic Challenges: Many tech platforms rely on algorithms to assist with content moderation. However, algorithms can be prone to biases and false positives or negatives.

Community Guidelines and User Feedback: Platforms often face criticism for either being too permissive or overly restrictive in their content moderation decisions. Striking the right balance and effectively communicating guidelines to users is crucial.
